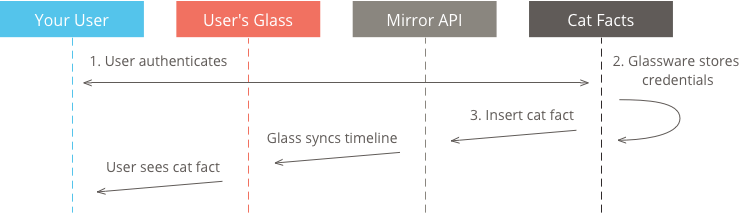

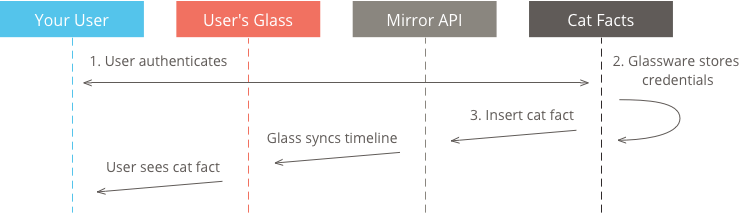

Google Glass has arguably become the most talked-about use case for embedded Android to date. The ambitious hardware project is powered by Android 4.0.3 (Ice Cream Sandwich). Until recently, however, developers could only build apps for Glass using the Mirror API, which exposes web applications to Glass but doesn’t install an Android application on the device itself.

The Mirror API allows you to build web based services that you can make Glass aware of. Glass will then retrieve HTML/JSON updates from your web service to display content on the screen. It’s hard to classify that as actual embedded Android development.

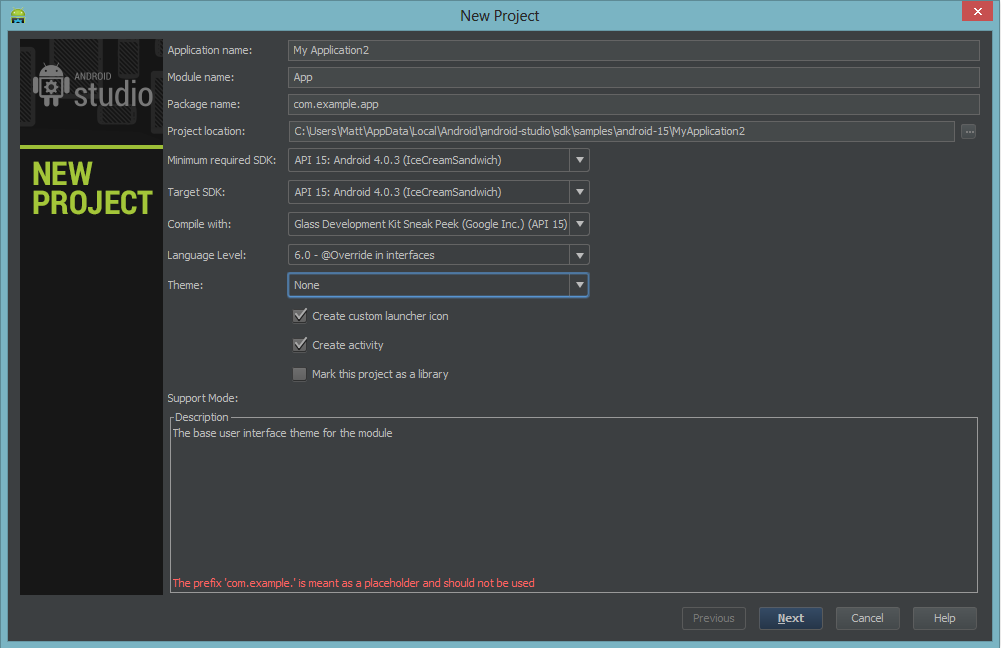

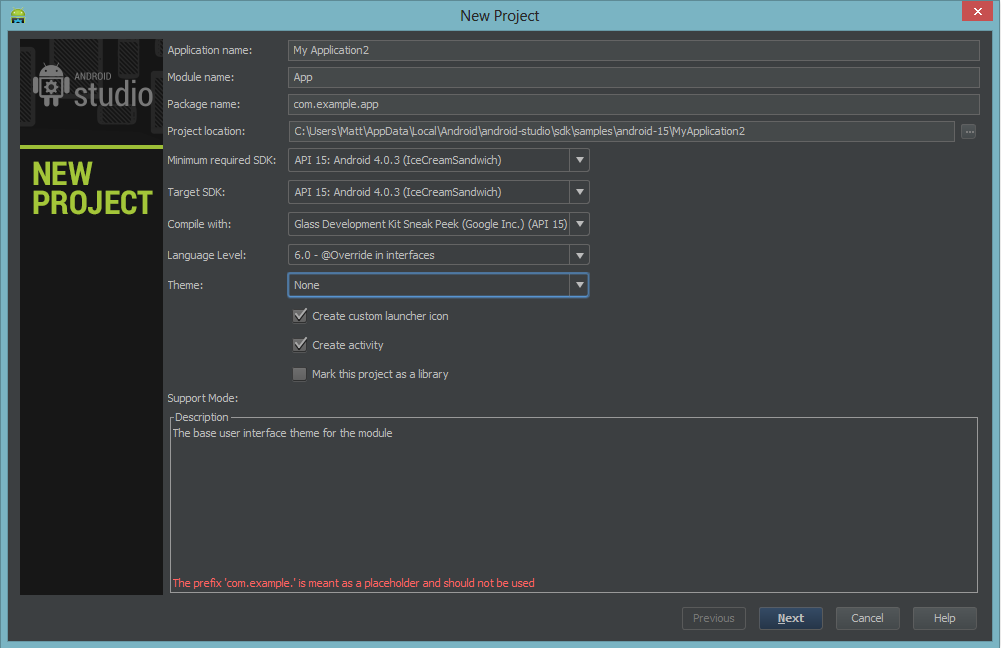

Earlier this month, Google announced a Glass Development Kit (GDK) that enables developers to build more traditional Android applications and install the APK directly onto Glass. The preview release of the GDK is available today and it opens all kinds of doors into Glass development and offers exciting potential for new types of embedded Android use cases.

So what new capabilities does the Glass Development Kit make available, you might ask? In short, everything you’ve been waiting for. The change is so drastic that Google presents the GDK and the Mirror API as two entirely different forms of development for Glass. Sure, they say you can use both at once, but why bother?

Glass apps developed with the GDK can be installed straight onto the hardware. This makes it possible to build offline apps, which do not require a network connection to run. In addition, the app can respond in real time to user interaction and can continuously process data and provide output or feedback based on the user’s surroundings. This is because the GDK gives you direct access to the Glass hardware rather than relying on web service calls for everything. The result is a vastly improved user experience. A major complaint about the Mirror API is that it is very sluggish to respond to events and provide updates. The GDK helps solve that problem.

Highlights Of The GDK Functionality

User Interface

– The Glass cards can now be generated from code with the following components:

- Main body text

- Left-aligned footer

- Right-aligned footer for a timestamp

- One image for the card’s background or multiple images displayed on the left side of the card

– You can create live cards with low or high frequency rendering. Low frequency rendering allows you to draw a card using a subset of the Android Layout controls. These controls include:

– If your app needs real time graphical updates or elements not included in the Low Frequency layout options, you can choose a High Frequency layout. In a High Frequency layout, you can draw directly on the card’s canvas using the full Android drawing library. Using High Frequency live cards, you can create anything from 3D games to augmented reality software.

– You can now create Immersions. Immersions are equivalent to normal Android activities. That means you get what amounts to the full capability of an Android application in your Glass app including greater flexibility in consuming user input and producing user interfaces.

Hardware Interface

– Touch gestures are now accessible directly. You can attach a touch event listener and gain access to the raw touchpad data. You can even set view specific touch event listeners so that you can have your app react differently depending on what screen the user is viewing.

Examples of the touch events you can recognize include Tap, Double Tap, Swipe Left, Swipe Right, and more.
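To make the idea of working with raw touchpad data concrete, here is a minimal sketch in plain Java of how an app might classify a single touch into a tap or a swipe. It does not use any GDK or Android classes; the class name `TouchpadGestureSketch`, the thresholds, and the assumption that a positive horizontal displacement means a swipe to the right are all hypothetical and chosen only for illustration.

```java
/** Hypothetical helper, not part of the GDK: classifies one touch from raw coordinates. */
final class TouchpadGestureSketch {
    enum Gesture { TAP, SWIPE_LEFT, SWIPE_RIGHT, UNKNOWN }

    // Assumed thresholds for illustration only; real values would need tuning on the device.
    static final float SWIPE_MIN_DISTANCE_PX = 100f;
    static final float TAP_MAX_DISTANCE_PX = 20f;
    static final long TAP_MAX_DURATION_MS = 300L;

    /**
     * @param downX      x position where the finger touched down
     * @param upX        x position where the finger lifted
     * @param durationMs time between touch down and lift
     */
    static Gesture classify(float downX, float upX, long durationMs) {
        float dx = upX - downX;
        // A short touch that barely moves counts as a tap.
        if (Math.abs(dx) <= TAP_MAX_DISTANCE_PX && durationMs <= TAP_MAX_DURATION_MS) {
            return Gesture.TAP;
        }
        // Assumption: increasing x corresponds to a swipe to the right.
        if (dx >= SWIPE_MIN_DISTANCE_PX) {
            return Gesture.SWIPE_RIGHT;
        }
        if (dx <= -SWIPE_MIN_DISTANCE_PX) {
            return Gesture.SWIPE_LEFT;
        }
        return Gesture.UNKNOWN;
    }
}
```

In a real app, the coordinates and timing would come from whatever touch listener you attach; a view-specific listener could simply map the same `Gesture` values to different actions per screen.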

– Voice input for your app is now available in two ways. First, an app can register to be launched by one of the 17 pre-defined commands available from the MyGlass screen, followed by your app name. For example: “OK glass, take a note with... __App name__”. If your app doesn’t fit into one of the 17 commands, you can ask Google to add a new phrase.

The second way you can interact with voice commands is by tapping into the speech recognition activity. Your app can trigger the speech listener which will wait for the user to speak. The audio will be converted into text which will be returned to your application to act upon.
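Once the recognized text reaches your app, something has to decide what to do with it. The following plain-Java sketch shows one simple approach, assuming the recognizer hands back a list of candidate transcriptions (as Android's standard speech recognition results do). The class `SpokenCommandSketch`, the `Action` values, and the keywords are hypothetical and not part of the GDK.

```java
import java.util.List;
import java.util.Locale;

/** Hypothetical helper, not part of the GDK: maps recognized speech to an app action. */
final class SpokenCommandSketch {
    enum Action { START_TIMER, STOP_TIMER, NONE }

    /** Returns the action for the first candidate transcription that matches a known keyword. */
    static Action interpret(List<String> candidates) {
        for (String candidate : candidates) {
            // Normalize whitespace and case so "  Start the timer" and "start" both match.
            String text = candidate.trim().toLowerCase(Locale.ROOT);
            if (text.startsWith("start")) {
                return Action.START_TIMER;
            }
            if (text.startsWith("stop")) {
                return Action.STOP_TIMER;
            }
        }
        return Action.NONE;
    }
}
```

Keyword matching like this is deliberately crude; it only illustrates the step between receiving text and acting on it.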

– Location and sensor data are now available directly from the hardware. Using the standard Android API calls, you can access the raw data and use it as desired. Retrieving the GPS coordinates has always been possible with Glass, but now you’re able to access the information from a variety of location providers (GPS, Network, etc.).
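With several location providers available, an app usually has to decide which reading to trust. Below is a minimal plain-Java sketch of one possible policy: ignore readings that are too old, then prefer the one with the smallest reported accuracy radius. The `Fix` class, its fields, and the policy itself are assumptions for illustration; they do not mirror any Android or GDK type.

```java
import java.util.List;

/** Hypothetical helper, not part of Android or the GDK: picks the best of several location fixes. */
final class LocationFixSketch {
    /** A single location reading from one provider (for example "gps" or "network"). */
    static final class Fix {
        final String provider;
        final float accuracyMeters; // smaller is better
        final long timestampMs;

        Fix(String provider, float accuracyMeters, long timestampMs) {
            this.provider = provider;
            this.accuracyMeters = accuracyMeters;
            this.timestampMs = timestampMs;
        }
    }

    /** Returns the most accurate fix no older than maxAgeMs, or null if none qualifies. */
    static Fix best(List<Fix> fixes, long nowMs, long maxAgeMs) {
        Fix best = null;
        for (Fix fix : fixes) {
            if (nowMs - fix.timestampMs > maxAgeMs) {
                continue; // too old to trust
            }
            if (best == null || fix.accuracyMeters < best.accuracyMeters) {
                best = fix;
            }
        }
        return best;
    }
}
```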

The sensor data is more interesting. You’re given access to the following sensor data:

These sensors allow for precise 6-axis motion tracking, even head tracking, which has enormous potential for software based on physical activity. The light sensor and magnetic field sensor also open up some interesting possibilities.
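As a rough illustration of head tracking, the plain-Java sketch below estimates head tilt (pitch and roll) from a single gravity reading, using the common atan2-based tilt formulas. The class name `HeadTiltSketch` is hypothetical, and the axis convention (z pointing out of the device when level) is an assumption; the actual orientation of the axes on Glass would need to be checked on the device.

```java
/** Hypothetical helper, not part of the GDK: estimates head tilt from a gravity vector. */
final class HeadTiltSketch {
    /**
     * @param ax gravity component along the assumed x axis, in m/s^2
     * @param ay gravity component along the assumed y axis, in m/s^2
     * @param az gravity component along the assumed z axis, in m/s^2
     * @return a two-element array: {pitchDegrees, rollDegrees}
     */
    static double[] pitchAndRoll(double ax, double ay, double az) {
        // Pitch: rotation that moves gravity onto or off the x axis.
        double pitch = Math.toDegrees(Math.atan2(-ax, Math.sqrt(ay * ay + az * az)));
        // Roll: rotation within the y-z plane.
        double roll = Math.toDegrees(Math.atan2(ay, az));
        return new double[] { pitch, roll };
    }
}
```

This only works while the wearer is roughly still, since an accelerometer cannot tell gravity from other accelerations; combining it with gyroscope data is the usual way to get smoother tracking.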

Notably absent from the list of available sensor data are:

– The camera hardware is now fully accessible via the Android camera API. You can program your own camera logic as desired, which is vastly more flexible than simply starting the stock camera intent and snapping a photo.

Shaping The Future Of Embedded Android

Aside from the expanded functionality of the Glass hardware, the improved toolset and new compile target (Glass Development Kit API 15) are a major boost to the viability of Android as an embedded operating system. The fact that Google itself is developing and releasing SDKs and specific build targets made purposefully for embedded use is huge. It’s clear that the sector is on the rise and that more systems are going to begin taking advantage of what Android has to offer for embedded use.

Wearable computers are likely to be the largest consumerization of embedded Android in history. Making an operating system ubiquitous by having it upfront and present at all times is going to enable a new breed of application not previously explored. As the technology matures, applications will react to the environment without users having to launch or ask for anything; the device will simply sense, adapt, and offer assistance.

This is just the beginning of what’s to come for embedded Android, but Glass shows us what’s already possible with today’s low-power but capable processors. A few years ago it would not have been feasible to fit a CPU powerful enough to run Android inside a pair of glasses. While the current iteration isn’t exactly polished, and the headgear gets quite hot, it’s a much more realistic attempt than others have been able to pull off with wearable computing. Will Glass take off and become mainstream hardware? It’s hard to say at this point. One thing is for sure, though: it’s pushing our society in that direction and shrinking the timeline to mass adoption unlike any other effort.

As the hardware progresses, so too will the software and tools used to program that hardware. Google is now further into the embedded Android business than it has ever been. With the developer of the operating system itself working to improve its performance and functionality for embedded use, other companies focused on embedded software are going to benefit greatly. Hurdles to embedded Android use, which have been present since the beginning, will slowly be removed, resulting in fewer specialized builds of Android and greater standardization, stability, and viability for the embedded community.

(Do you find this article interesting? You may want to check out our Embedded Android pages to read more about what we do in this space.)
